Introducing AI Cloud Services Powered by DDN

AI Cloud Services Powered by DDN remove the barriers to getting your AI off the ground. We’ve already done the integration and performance engineering with the cloud providers, so you can focus on building and not on infrastructure. Just choose the service and go. And because it’s Powered by DDN, you get the same high-throughput, efficient, at-scale performance trusted by the world’s top AI platforms. The data platform you choose matters — ask for Powered by DDN.

Choose Your AI Cloud

The Powered by DDN Difference

When you choose an AI cloud service Powered by DDN, you’re choosing the performance foundation behind the world’s most advanced AI platforms. This is what accelerates your AI from idea to production, without compromise.

Accelerate AI Deployment Across Data Centers and Clouds

With integrated orchestration, validation, and portability, customers experience predictable, high-performance AI that is simple to deploy and effortless to scale, in the data center or in the cloud.

Hybrid Consistency Cuts Risk

Identical performance ensures that models trained on-prem will behave the same in the cloud, minimizing risk and avoiding costly tuning or troubleshooting. This consistency improves reliability for production deployments and supports hybrid scaling strategies.

Faster ROI for Accelerated AI

Accelerate AI success with 3× faster time to first model and achieve 2× ROI in just 90 days. Reduce delays, cut costs, and move from concept to production in record time.

High-Performance AI Training at Scale

Powered by DDN EXAScaler

Google Cloud’s first-party Managed Lustre service is built on DDN EXAScaler, delivering top-tier data throughput for enterprise-scale and multi-node training.

Key Advantages:

- Extreme training throughput for large-scale models

- Up to 15× faster checkpointing

- Faster model and dataset loading

- Balanced I/O for mixed AI workloads

- Validated with NVIDIA AIDP and next-generation GPU fabrics

- Integrated with GCE, GKE, Vertex AI, and TPU/GPU fleets

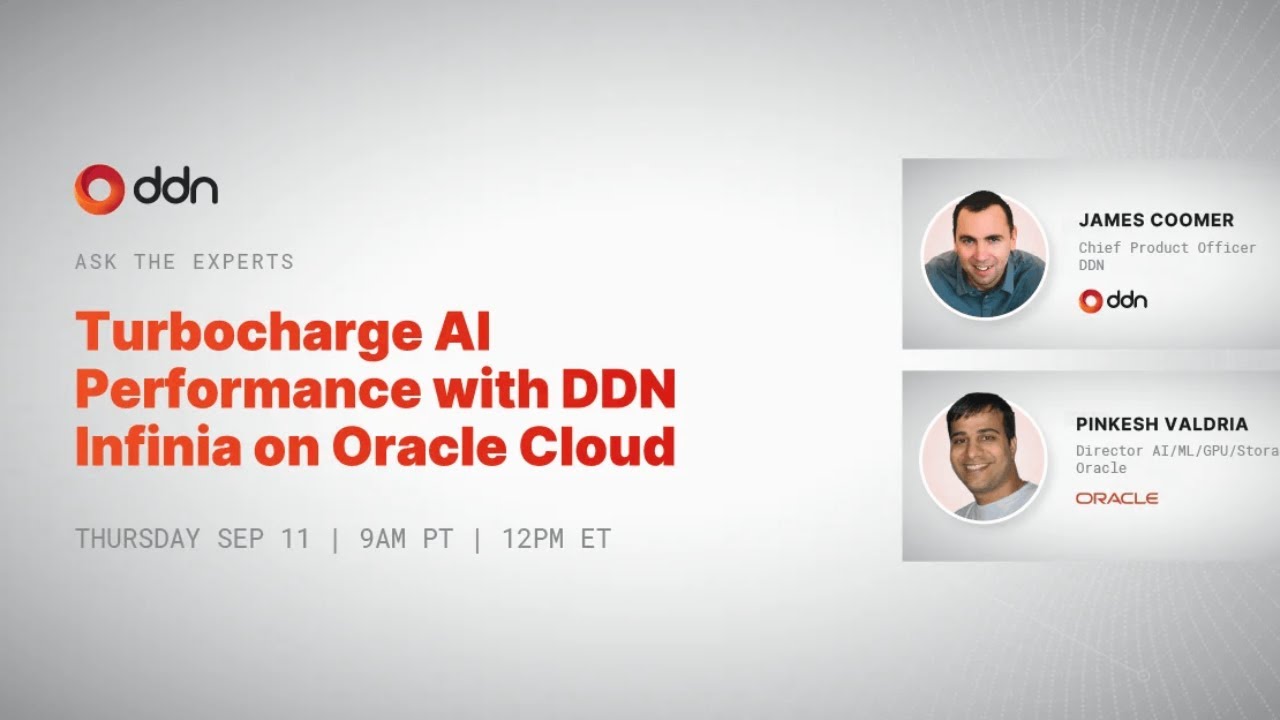

Production-Grade Inference & RAG

Powered by DDN Infinia

DDN Infinia on OCI delivers low-latency inference, efficient KV caching, and high concurrency for real-time RAG, agentic AI, and interactive AI services.

Key Advantages:

- Low-latency data serving for inference and RAG

- KV caching and token reuse for faster responses

- Supports longer prompts and more concurrent sessions

- Elastic scaling for dynamic workloads

- Enterprise durability and multi-tenancy

- Ideal for chatbots, RAG pipelines, agentic AI, and API-driven inference

High-Performance GPU Clouds for Real AI Workloads

A growing ecosystem of GPU cloud platforms runs on DDN’s data engine, providing fast, predictable performance. Whether you’re running large-scale AI workloads, training cutting-edge models, or building production-ready applications, our GPU clouds Powered by DDN offer the speed, reliability, and flexibility you need, wherever you are.

GPU Supercomputers for Leading AI Labs

We are your infrastructure team – we deploy and operate your cluster so you can focus on building world-class models.

Countries Served: Worldwide

Driving Digital Transformation

With AI as a key focus, FPT has been integrating AI across its products and solutions to drive innovation and enhance user experiences.

Countries Served: Vietnam, Japan

The AI Developer Cloud

On-demand NVIDIA GPU instances & clusters for AI training & inference.

Countries Served: United States

GPU Merdeka: Solution for AI Nation

Accelerate the development of Indonesia as an AI nation, achieve sovereign AI, and ensure data security with a no-egress policy.

Countries Served: Indonesia

Connecting to Hyperscale

NAVER Cloud is for every business and offers the technological foundation for your success.

Countries Served: South Korea

The Ultimate Cloud for AI Explorers

The most efficient way to build, tune and run your AI models and applications on top-notch NVIDIA GPUs.

Countries Served: Netherlands, United States

Unlock the Full Potential of Your Business

Innovative solutions to streamline your productivity, improve customer experience and save on costs.

Countries Served: United Arab Emirates

Europe’s Leading Cloud Provider

Build, train, deploy and scale AI models on a resilient, sovereign, and sustainable infrastructure.

Region Served: Europe

Fastest Cutting-edge Infrastructure

Designed for large-scale, GPU-accelerated workloads, SIAM.AI offers unmatched access to a wide array of computing solutions, with up to 35× faster speeds and 80% lower costs.

Countries Served: Thailand

Global Scale Cloud Infrastructure

High-performance cloud computing and Cloud GPU for AI/ML training and inference, AR/VR, HPC, and more—fast, scalable, and cost-effective.

Countries Served: Worldwide

Not Sure Which AI Cloud Is Right for You?

Our experts can help you evaluate training, inference, and GPU cloud options — and recommend the best DDN-powered path for your workload.

Contact Us

“Powered by DDN” means the cloud service uses DDN’s high-performance data engine, giving you the same throughput, speed, and efficiency used in leading AI platforms. This improves training, inference, and RAG performance.

AI models depend heavily on data throughput. DDN eliminates bottlenecks with fast data pipelines, enabling teams to run training and inference workloads at full speed with less tuning and less infrastructure overhead.

- Fastest data paths for AI workloads

- Faster iteration cycles and reduced time to production

- Efficient scaling for training, inference, and RAG

- Hybrid consistency across on-prem and cloud

- Validated architectures aligned to major AI platforms

- Lower cost per model, per iteration, and per token due to higher efficiency

Google Cloud and Oracle Cloud offer integrated DDN services for training and inference. Additional GPU cloud providers, including CoreWeave, Lambda, Vultr, Scaleway, and Northern Data, run on DDN-powered performance architectures.

Yes. DDN EXAScaler accelerates training performance, while DDN Infinia accelerates inference and RAG workloads. Both are available through cloud partners and GPU cloud providers.

DDN handles the performance engineering and integrations with cloud providers upfront. This removes infrastructure setup and tuning, allowing teams to launch AI workloads faster and scale without bottlenecks.